Over the past few years, I have gotten deeper and deeper into 3D printing. One thing that fascinates me is how different it is from other manufacturing methods, and how this, in turn, calls for a completely different design philosophy when creating parts for 3D printing.

So I have been collecting little tricks and rules for designing parts that print well, and of course I am always on the hunt for more. In this blog post I want to share everything I have learned so far.

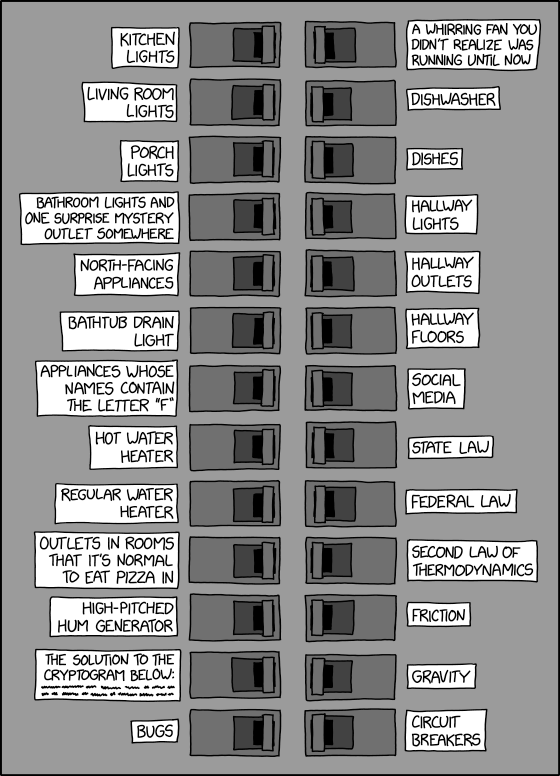
